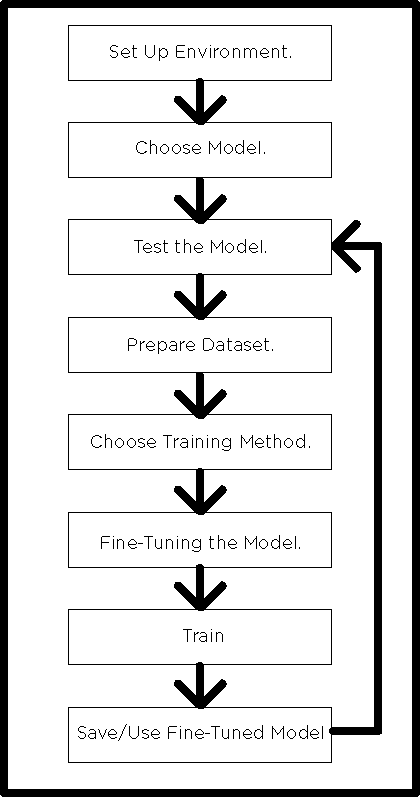

I did enroll in many LLM courses for confirm this. I see that I needed to understand step by step more than show all the step, below is what summary on each step.

Step 1: Set Up Your Environment.

Choose your hardware

Install dependencies

Set up GPU acceleration (CUDA) (optional). If you use Mac M series or Arm based this may be not possible.

Step 2: Choose Your Model.

There were a lot of Pretrained model that you can choose, choose both model and parameters size (B).

eg. LLaMA (Meta), Mistral / Mixtral, Falcon, Gemma (Google), Phi (Microsoft).

Step 3: Test the Model (Inference Only).

Step 4: Prepare Your Dataset (for training).

Step 5: Choose Training Method

eg. Full fine-tuning, quantized, Parameter-Efficient Fine-Tuning (PEFT).

Step 6: Fine-Tuning the Model

Step 7: Train (by the dataset in step 4).

Step 8: Save + Use Your Fine-Tuned Model

And then back to Step 3: Test the Model (Inference Only).

You will continue to do prepare new Data set (step 4) and continue to step 8 and back to step 3 again until the result suit you.